As autonomous systems take on more responsibility in contested, complex environments, a subtle but critical challenge is emerging—one that can’t be solved by better sensors or faster processors alone.

Trust.

Today’s AI-enabled systems are no longer just tools executing discrete commands. They increasingly function as teammates—making decisions, adapting to conditions, and acting with a degree of independence that fundamentally changes the human operator’s role. But as autonomy matures, trust becomes a double-edged sword. Too little trust and operators over-intervene, negating the benefits of autonomy. Too much trust and they disengage, potentially missing failures or edge cases when human judgment is most needed.

The challenge isn’t whether humans should trust autonomous systems—it’s whether that trust is properly calibrated.

That challenge sits at the heart of the Validated Indicators of Selective Trust in Autonomy (VISTA) project, led by Kairos Research through the Ohio Federal Research Network (OFRN).

When Autonomy Becomes a Teammate

“In many operational contexts, autonomy is already behaving like a teammate rather than a piece of equipment,” said Brad Minnery, CEO of Kairos Research. “But we haven’t given human operators the same kinds of cues they rely on when working with other people—signals that help them decide when to rely, when to question, and when to step in.”

Human teams continuously exchange trust signals—through communication patterns, performance consistency, and contextual awareness. Autonomous systems, by contrast, often operate as black boxes. Operators are expected to supervise multiple vehicles simultaneously, interpret raw telemetry, and infer system health and intent under time pressure.

The result is a growing gap between system capability and human understanding.

VISTA is designed to close that gap.

Understanding How Trust Is Gained—and Lost

At its core, the VISTA project seeks to understand which observable behaviors of an autonomous system cause a human operator to gain or lose trust, and how those trust shifts affect mission performance.

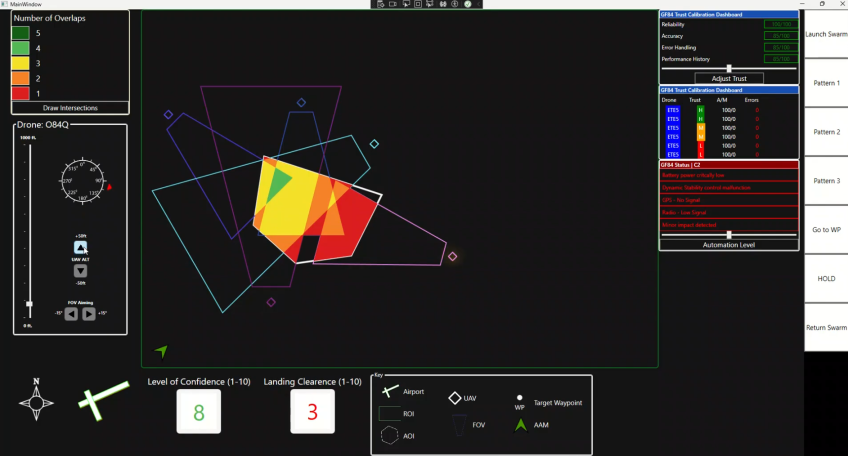

The research focuses on a particularly demanding use case: a single human pilot supervising multiple autonomous drones at once. In these scenarios, cognitive workload is high, attention is limited, and decisions must be made quickly—conditions where miscalibrated trust can have serious consequences.

“Trust isn’t static, and it’s not one-size-fits-all,” said Dr. Rajan Bhattacharyya, Director of Research, Kairos Research, and Co-Principal Investigator on the VISTA project. “It changes based on context, task demands, and how the system behaves over time. Our goal is to identify the indicators that actually matter to operators—and make those indicators visible in a meaningful way.”

Rather than asking pilots to dig through data streams or technical diagnostics, the VISTA team is working toward an interactive, cockpit-ready dashboard that presents at-a-glance indicators of an autonomous system’s trustworthiness. These indicators are intended to help pilots rapidly assess when autonomy is performing as expected—and when human intervention could improve overall team performance.

Designing for the Human in the Loop

VISTA is not about removing humans from the loop. It’s about supporting effective human supervision.

“Good autonomy doesn’t replace the human—it enables better human decision-making,” Minnery said. “We’re focused on selective trust: helping operators know when autonomy is doing exactly what it should, and when it’s time to take a closer look.”

To ensure the research reflects real operational needs, the VISTA team is engaging closely with the Air Force Research Laboratory (AFRL) throughout the project. AFRL feedback is informing experimental design, performance metrics, and how insights could translate into future defense aerospace applications.

“This isn’t trust research in the abstract,” Minnery said. “We’re grounding it in the realities of how autonomy is being integrated into Air Force missions today—and where it’s headed next.”

OFRN as a Catalyst for Mission-Aligned Innovation

The VISTA project also highlights the unique role OFRN plays in the autonomy ecosystem.

Managed by Parallax Advanced Research in collaboration with The Ohio State University and funded by the Ohio Department of Higher Education, OFRN is designed to connect Ohio-based startups, universities, and federal partners around applied research problems that matter.

“OFRN exists to shorten the distance between innovative ideas and real mission impact,” said Maj. Gen. (Ret.) Mark Bartman, Executive Director of OFRN. “VISTA is a strong example of how we bring together startups, academic expertise, and federal stakeholders to tackle problems that are technically hard, operationally relevant, and increasingly urgent.”

For VISTA, that ecosystem includes cognitive science researchers at Wright State University, who bring deep expertise in human–machine trust, and Sinclair Community College’s National UAS Training and Certification Center, home to advanced UAS pilot training and simulation infrastructure.

“That combination is powerful,” Bartman said. “You have a company like Kairos Research that understands operational autonomy challenges, academic researchers who study trust scientifically, and training environments that reflect how these systems are actually used. OFRN helps align those pieces around a shared mission.”

Why Trust Calibration Matters Now

As unmanned and autonomous systems proliferate across defense, commercial, and civil applications, the question of trust calibration will only become more consequential.

Autonomy failures don’t always stem from technical breakdowns. Often, they arise from misaligned human expectations—when operators either rely too heavily on systems that aren’t designed for certain conditions or fail to leverage autonomy when it could improve safety and performance.

“Trust is an operational variable,” Bartman said. “If we don’t understand and design for it, we’re leaving capability on the table—or worse, introducing new risks.”

By making trust observable, measurable, and actionable, VISTA points toward a future where human–autonomy teams perform not just efficiently, but intelligently—each member, human and machine, contributing at the right time and in the right way.

For the unmanned systems community, that future may depend less on how autonomous systems think—and more on how well they’re understood.

###

About Parallax Advanced Research & Ohio Aerospace Institute

Parallax Advanced Research is a research institute that tackles global challenges through strategic partnerships with government, industry, and academia. It accelerates innovation, addresses critical global issues, and develops groundbreaking ideas with its partners. With offices in Ohio and Virginia, Parallax aims to deliver new solutions and speed them to market. In 2023, Parallax and the Ohio Aerospace Institute (OAI) formed a collaborative affiliation to drive innovation and technological advancements in Ohio and for the nation. OAI plays a pivotal role in advancing the aerospace industry in Ohio and the nation by fostering collaborations between universities, aerospace industries, and government organizations and by managing aerospace research, education, and workforce development projects.

About the Ohio Federal Research Network

The mission of the Ohio Federal Research Network (OFRN) is to stimulate Ohio’s innovation economy by building statewide university-industry research collaborations that meet the requirements of Ohio’s federal laboratories, resulting in the creation of technologies that drive job growth for the State of Ohio. The OFRN is a program managed by Parallax Advanced Research in collaboration with The Ohio State University and is funded by the Ohio Department of Higher Education.